We don't want to trace the ray through the real geometry, which would be expensive. Instead, it is computed what pixel it corresponds to from the G-buffer of the deferred shader. The data we need from the defered shader is: World position, normals and colors.

To do the actual ray tracing, an iterative algorithm is needed. The reflected vector is first computed, and then incrementally added to the world position of the point we start with. This is repeated as long as needed.

There are a couple of problems that limit the use of this technology:

- The reflected ray can point backward to the camera and hit the near cutting plane of the view frustum.

- The reflected ray can point sideways, and go outside of the screen.

- The reflected ray can hit a pixel that is hidden behind a near object.

- The increment used in the algorithm should be small to get a good resolution of the eventual target point, which will force the use of many iterations and high evaluation costs.

These all sound like severe limitations, but it turns out that wet surface reflection can still be done effectively:

- The Fresnel reflection has the effect of reflecting most for incoming light at large angles to the normal. That means that light reflecting backward toward the camera can be ignored.

- Wet surfaces are typically rough. This can be simulated with some random noise, which can hide the effect where some ray reflections couldn't be determined.

- The iteration can start out with a small delta, and increase it exponentially. That way, it can be possible to get good resolution on short distances, while still being possible to compute long distances.

The following is an example fragment shader to help calibrate the constants:

// Create a float value 0 to 1 into a color from red, through green and then blue.

vec4 rainbow(float x) {

float level = x * 2.0;

float r, g, b;

if (level <= 0) {

r = g = b = 0;

} else if (level <= 1) {

r = mix(1, 0, level);

g = mix(0, 1, level);

b = 0;

} else if (level > 1) {

r = 0;

g = mix(1, 0, level-1);

b = mix(0, 1, level-1);

}

return vec4(r, g, b, 1);

}

uniform sampler2D colTex; // Color texture sampler

uniform sampler2D posTex; // World position texture sampler

uniform sampler2D normalTex; // Normal texture sampler

in vec2 screen; // The screen position (0 to 1)

layout(location = 0) out vec4 color;

void main(void)

{

vec3 worldStartingPos = texture(posTex, screen).xyz;

vec3 normal = texture(normalTex, screen).xyz;

vec3 cameraToWorld = worldStartingPos.xyz - UBOCamera.xyz;

float cameraToWorldDist = length(cameraToWorld);

vec3 cameraToWorldNorm = normalize(cameraToWorld);

vec3 refl = normalize(reflect(cameraToWorldNorm, normal)); // This is the reflection vector

if (dot(refl, cameraToWorldNorm) < 0) {

// Ignore reflections going backwards towards the camera, indicate with white

color = vec4(1,1,1,1);

return;

}

vec3 newPos;

vec4 newScreen;

float i = 0;

vec3 rayTrace = worldStartingPos;

float currentWorldDist, rayDist;

float incr = 0.4;

do {

i += 0.05;

rayTrace += refl*incr;

incr *= 1.3;

newScreen = UBOProjectionviewMatrix * vec4(rayTrace, 1);

newScreen /= newScreen.w;

newPos = texture(posTex, newScreen.xy/2.0+0.5).xyz;

currentWorldDist = length(newPos.xyz - UBOCamera.xyz);

rayDist = length(rayTrace.xyz - UBOCamera.xyz);

if (newScreen.x > 1 || newScreen.x < -1 || newScreen.y > 1 || newScreen.y < -1 || newScreen.z > 1 || newScreen.z < -1 || i >= 1.0 || cameraToWorldDist > currentWorldDist) {

break; // This is a failure mode.

}

} while(rayDist < currentWorldDist);

if (cameraToWorldDist > currentWorldDist)

color = vec4(1,1,0,1); // Yellow indicates we found a pixel hidden behind another object

else if (newScreen.x > 1 || newScreen.x < -1 || newScreen.y > 1 || newScreen.y < -1)

color = vec4(0,0,0,1); // Black used for outside of screen

else if (newScreen.z > 1 && newScreen.z < -1)

color = vec4(1,1,1,1); // White outside of frustum

else

color = rainbow(i); // Encode number of iterations as a color. Red, then green and last blue

return;

}

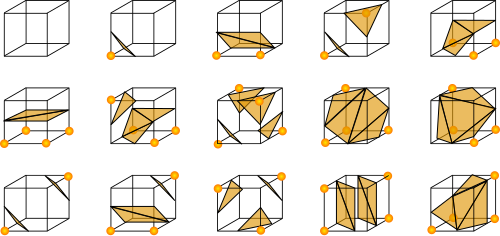

The example code will do at max 20 iterations (increment i with 0.05). Notice how the delta gradually is increased (incr is increased by 30% every iteration at line 50). Starting with a color texture as follows:

|

| Original |

|

| Calibration mode |

Notice that the single monster is reflected twice, at two different iterations. This is an effect of iterating in big steps.

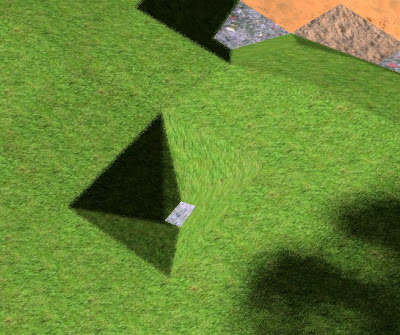

Next is a picture from inside a cave, with no reflections enabled:

|

| Caves without reflections |

|

| Caves with reflections |

// Create a float value 0 to 1 into a color from red, through green and then blue.

vec4 rainbow(float x) {

float level = x * 2.0;

float r, g, b;

if (level <= 0) {

r = g = b = 0;

} else if (level <= 1) {

r = mix(1, 0, level);

g = mix(0, 1, level);

b = 0;

} else if (level > 1) {

r = 0;

g = mix(1, 0, level-1);

b = mix(0, 1, level-1);

}

return vec4(r, g, b, 1);

}

// Return a random value between -1 and +1.

float noise(vec3 v) {

return snoise((v.xy+v.z)*10);

}

uniform sampler2D colTex; // Color texture sampler

uniform sampler2D posTex; // World position texture sampler

uniform sampler2D normalTex; // Normal texture sampler

uniform usampler2D materialTex; // Material texture sampler

in vec2 screen; // The screen position (0 to 1)

layout(location = 0) out vec4 color;

// #define CALIBRATE // Define this to get a color coded representation of number of needed iterations

void main(void)

{

vec4 origColor = texture(colTex, screen);

uint effectType = texture(materialTex, screen).r & 0xf; // High nibbles are used for modulating factor

if (effectType != 1) {

color = origColor;

return;

}

vec3 worldStartingPos = texture(posTex, screen).xyz;

vec3 normal = texture(normalTex, screen).xyz;

vec3 cameraToWorld = worldStartingPos.xyz - UBOCamera.xyz;

float cameraToWorldDist = length(cameraToWorld);

float scaleNormal = max(3.0, cameraToWorldDist*1.5);

#ifndef CALIBRATE

normal.x += noise(worldStartingPos)/scaleNormal;

normal.y += noise(worldStartingPos+100)/scaleNormal;

#endif

vec3 cameraToWorldNorm = normalize(cameraToWorld);

vec3 refl = normalize(reflect(cameraToWorldNorm, normal)); // This is the reflection vector

#ifdef CALIBRATE

if (dot(refl, cameraToWorldNorm) < 0) {

// Ignore reflections going backwards towards the camera, indicate with white

color = vec4(1,1,1,1);

return;

}

#endif

float cosAngle = abs(dot(normal, cameraToWorldNorm)); // Will be a value between 0 and 1

float fact = 1 - cosAngle;

fact = min(1, 1.38 - fact*fact);

#ifndef CALIBRATE

if (fact > 0.95) {

color = origColor;

return;

}

#endif // CALIBRATE

vec3 newPos;

vec4 newScreen;

float i = 0;

vec3 rayTrace = worldStartingPos;

float currentWorldDist, rayDist;

float incr = 0.4;

do {

i += 0.05;

rayTrace += refl*incr;

incr *= 1.3;

newScreen = UBOProjectionviewMatrix * vec4(rayTrace, 1);

newScreen /= newScreen.w;

newPos = texture(posTex, newScreen.xy/2.0+0.5).xyz;

currentWorldDist = length(newPos.xyz - UBOCamera.xyz);

rayDist = length(rayTrace.xyz - UBOCamera.xyz);

if (newScreen.x > 1 || newScreen.x < -1 || newScreen.y > 1 || newScreen.y < -1 || newScreen.z > 1 || newScreen.z < -1 || i >= 1.0 || cameraToWorldDist > currentWorldDist) {

fact = 1.0; // Ignore any reflection

break; // This is a failure mode.

}

} while(rayDist < currentWorldDist);

// } while(0);

#ifdef CALIBRATE

if (cameraToWorldDist > currentWorldDist)

color = vec4(1,1,0,1); // Yellow indicates we found a pixel hidden behind another object

else if (newScreen.x > 1 || newScreen.x < -1 || newScreen.y > 1 || newScreen.y < -1)

color = vec4(0,0,0,1); // Black used for outside of screen

else if (newScreen.z > 1 && newScreen.z < -1)

color = vec4(1,1,1,1); // White outside of frustum

else

color = rainbow(i); // Encode number of iterations as a color. Red, then green, and last blue.

return;

#endif

vec4 newColor = texture(colTex, newScreen.xy/2.0 + 0.5);

if (dot(refl, cameraToWorldNorm) < 0)

fact = 1.0; // Ignore reflections going backwards towards the camera

else if (newScreen.x > 1 || newScreen.x < -1 || newScreen.y > 1 || newScreen.y < -1)

fact = 1.0; // Falling outside of screen

else if (cameraToWorldDist > currentWorldDist)

fact = 1.0;

color = origColor*fact + newColor*(1-fact);

}

The source code for this can be found at the Ephenation ScreenSpaceReflection.glsl. The snoise function is a 2D simplex noise that can be found at Ephenation common.glsl.